The Downfall of GeeksForGeeks

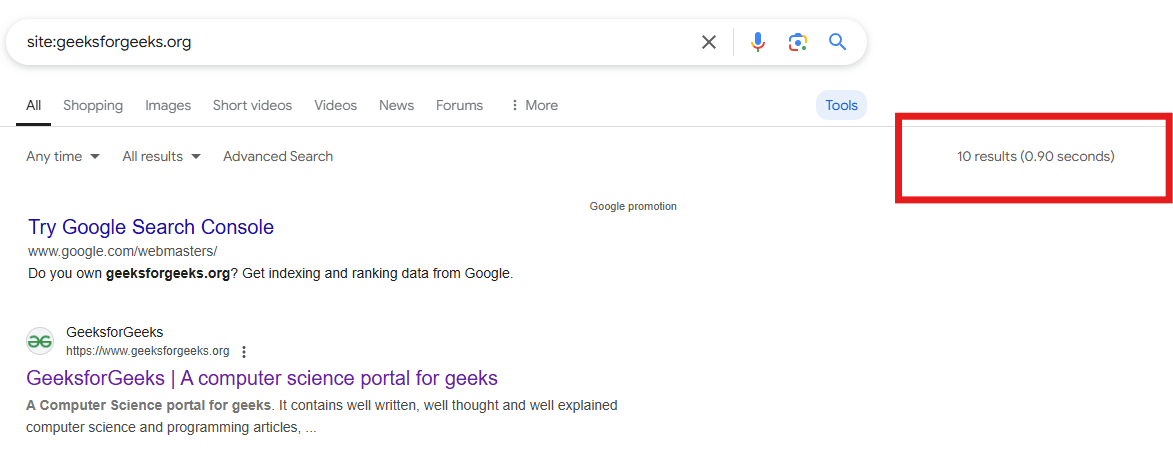

GeeksforGeeks, a well-known educational website, got de-indexed from the SERPs! Thousands of pages literally gone! A simple site: operator check now returns only 10 URLs.

Why is this big news?

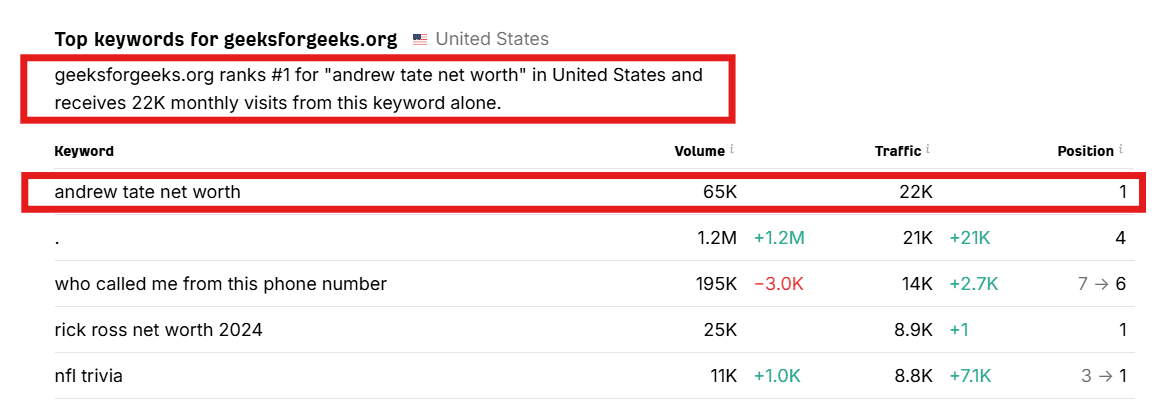

Here are some stats about the website, so you can understand the magnitude of the loss and the type of authority this website had in search before losing its crown.

Imagine all of this...GONE!

The subdomains

If you search for the brand name "geeks for geeks", the results are links to their socials and such, but you will not see a single URL from the website or any of its subdomains. Aren't subdomains treated as separate entities that don't impact the main domain, as Google has stated over and over?

I could see the subdomains in the SERPs a few days ago with the site: operator. Now they're gone.

I know that some people claim that the Google API Leaks suggested that subdomains are often considered part of the main domain and therefore can influence the overall ranking of the primary domain and vice versa.

We also know the Monster.com case study, where they moved their content from a subdomain to a subfolder and subsequently saw a 116% increase in search visibility.

Takeaway: IMO, Google considers there to be a relationship between the main domain and its subdomains that can impact rankings, but it's not straightforward. So, for example, if your main domain or subdomains are leveraging spammy tactics, they can impact each other's visibility in search. At least, that's what we can see with GeeksforGeeks.

The main straw

Your website is ranking in Google for a reason, and a big part of that reason is your topical authority and "relevance" to a specific topic. GeeksforGeeks started branching out and posting highly irrelevant topics on their website. Most SEOs think this is the main cause of the downfall.

I like this quote from Live Breathe SEO: "You can’t teach algorithms to code and also gossip about celebrities on the same domain. Not without consequences. Google’s manual action cited “thin content.” But the real issue? Topical drift. A failure of focus."

HOWEVER...

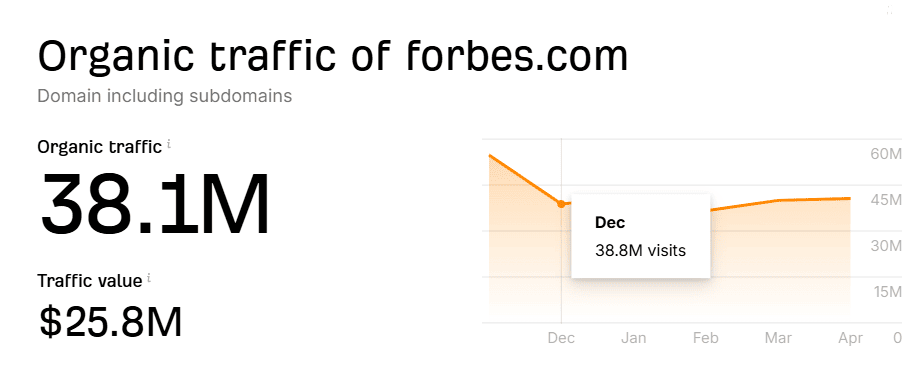

We all still remember how Google served the forbes.com/advisor/ folder a manual action back in November for site reputation abuse. Yes, the folder only, not the entire website. You can see in the screenshot how traffic declined from November to December and millions of clicks were lost.

Why would Google punish the entire GeeksforGeeks website while Forbes only got a folder-level manual action? I mean, Forbes Advisor simply switched subfolders after getting hit with the manual penalty, and they are on the path to getting their traffic back (worth millions of dollars per month)...

Site reputation abuse vs. thin content penalties:

- Site reputation abuse, as per the Google Search Central blog, is the practice of publishing third-party pages on a site in an attempt to abuse search rankings by taking advantage of the host site's ranking signals.

- Thin content, as per Google's support page on manual actions, means that Google has detected low-quality or shallow pages on your site. The page goes on to list common examples of pages that often have thin content with little or no added value.

Takeaway: The reason Forbes got a folder-level manual action rather than a site-wide one is that "Google has detected that a portion of the site is violating the spam policy". In the GeeksforGeeks case, the problematic content was not isolated in a specific folder or subdomain, and therefore Google had to penalize the entire website. If you look at the website's URL structure, it's flat, with much of their content and many of their guides nested directly under the homepage and not in a specific subfolder. Here are some examples:

- geeksforgeeks.org/google-search-console-guide

- geeksforgeeks.org/hashing-data-structure

- geeksforgeeks.org/introduction-to-splay-tree-data-structure

The content issue was spread across the whole website, and that's why the entire website was penalized!
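To get a rough sense of how flat a site's URL structure is, one option is to group a list of URLs by their first path segment. The sketch below is only an illustration, not a description of how Google evaluates sites: `first_segment_counts` is a hypothetical helper, and the `example.com/advisor/` URL is made up purely for contrast with the flat URLs listed above.

```python
from collections import Counter
from urllib.parse import urlparse


def first_segment_counts(urls):
    """Count URLs by top-level folder; pages directly under the homepage go to '(root)'."""
    counts = Counter()
    for url in urls:
        # urlparse only recognizes the host when a scheme is present, so add one if missing.
        parsed = urlparse(url if "://" in url else "https://" + url)
        segments = [s for s in parsed.path.split("/") if s]
        # A single path segment means the page is not nested in any subfolder.
        key = segments[0] if len(segments) > 1 else "(root)"
        counts[key] += 1
    return counts


urls = [
    "geeksforgeeks.org/google-search-console-guide",
    "geeksforgeeks.org/hashing-data-structure",
    "geeksforgeeks.org/introduction-to-splay-tree-data-structure",
    "example.com/advisor/example-page",  # hypothetical URL nested in a subfolder
]
counts = first_segment_counts(urls)
print(counts)
```

If most of a crawl export lands in the `(root)` bucket, unrelated topics are probably not isolated in their own folders.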

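*Unrelated comment: I think they are using UTM parameters on the top navigation menu links, which is not a good practice, since UTM tags on internal links can overwrite the original traffic source in analytics and create duplicate URL variants.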
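If you want to check your own navigation links for UTM tags, a minimal sketch could look like the one below. The `utm_params` helper and the `example.com` links are hypothetical, and in practice you would feed it the links extracted from your own menu.

```python
from urllib.parse import urlparse, parse_qs


def utm_params(url):
    """Return the utm_* query parameters found in a URL (values are lists, as parse_qs returns them)."""
    query = parse_qs(urlparse(url).query)
    return {k: v for k, v in query.items() if k.lower().startswith("utm_")}


# Hypothetical internal navigation links, for illustration only.
links = [
    "https://www.example.com/courses?utm_source=nav&utm_medium=menu",
    "https://www.example.com/courses",
]
tagged = [link for link in links if utm_params(link)]
print(tagged)
```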

Backlinks, they say

Some may look at the backlink profile and anchor text of geeksforgeeks.org and give it partial blame. I don't think this is the main factor, or even a strong one.

It is not uncommon for large, popular websites to attract random spammy backlinks, and we've learned over the years that Google has gotten better at ignoring them.

Connecting the Dots

There's more to SEO than meets the eye and I guess the key lessons here are:

- I don't think the problem was the idea of expanding to more topics. Let's say you're a health website and you decide to expand into beauty. The topics are closely related, so the expansion makes sense. That was not the type of content expansion that geeksforgeeks.org went for, hence the penalty.

- Your URL structure matters more than you think. It can be the difference between a site-wide manual action and a folder-level penalty. If you want to try new topics or test things, try to do that in isolation from the rest of the content on the website by using either a specific subfolder or a subdomain.

- Keep an eye on your subdomains. While they seem to be treated as separate entities most of the time, spammy practices seem to have a wider impact.

That's it for today, folks, and see you in the next newsletter!

The SEO Riddler Newsletter
