Calculating SERPs Compression Ratio With Python

I love how this community builds on each other's work! This article is no different. It all started with Roger Montti publishing a post about "How Compression Can Be Used To Detect Low Quality Pages" on Search Engine Journal. Next, Chris Long shared on LinkedIn how Dan Hinckley from the GoFish Digital team built a Python script to calculate the compression ratio of a page! Cool stuff, right?


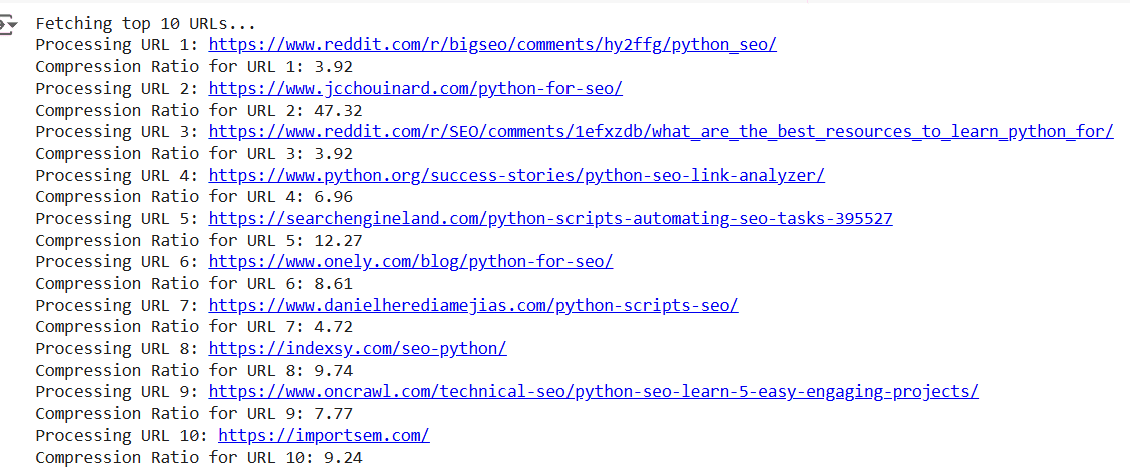

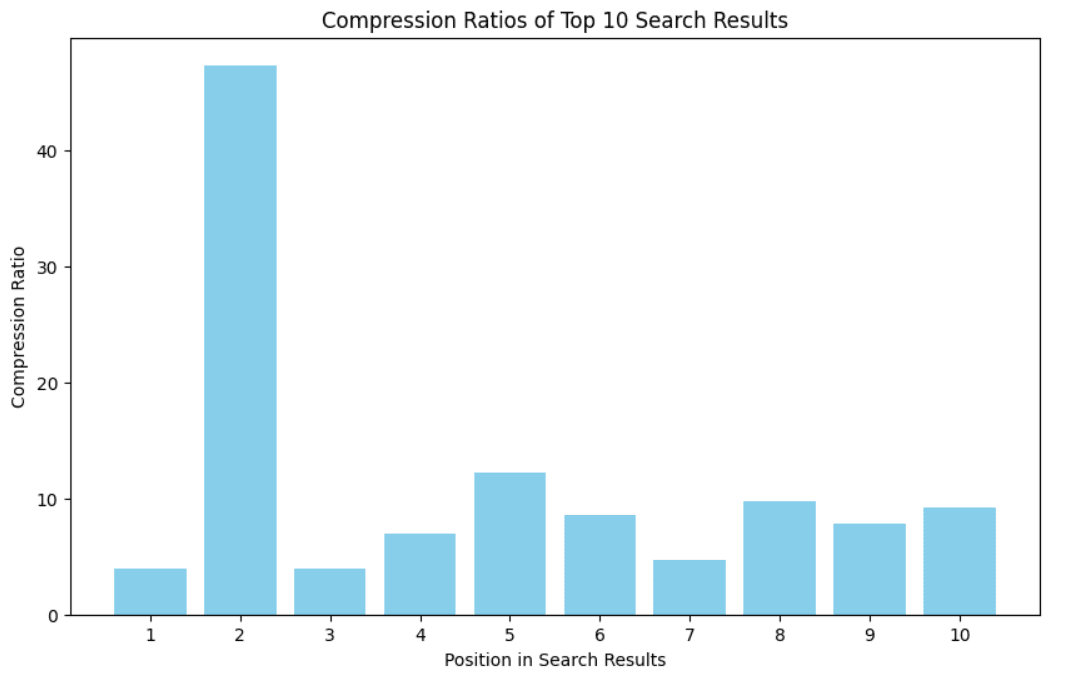

Inspired by their work, I decided to create my own Python script. This script takes a keyword, pulls the URLs of the top 10 search results for that keyword, retrieves their content, and calculates the compression ratio for each page. I was curious to see if there’s any relationship between compression ratio and page rankings.
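The per-page part of that workflow can be sketched roughly as follows. This is a minimal sketch, not the author's (or Dan Hinckley's) script: it assumes you already have the top 10 URLs in ranking order, because how you pull them depends on the SERP API or tool you use. It also assumes the ratio is defined as original text size divided by gzip-compressed size. It uses only the standard library (`urllib.request` to download, `html.parser` to keep visible text, `gzip` for the ratio); the helper names `extract_text`, `compression_ratio`, `fetch_html` and `ratios_for_serp` are hypothetical.

```python
import gzip
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class VisibleTextExtractor(HTMLParser):
    """Collects text nodes, skipping anything inside <script> or <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def extract_text(html: str) -> str:
    """Return the visible text of an HTML document, joined with spaces."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def compression_ratio(text: str) -> float:
    """Original UTF-8 size divided by gzip-compressed size (assumed definition)."""
    raw = text.encode("utf-8")
    return len(raw) / len(gzip.compress(raw)) if raw else 0.0


def fetch_html(url: str, timeout: float = 10.0) -> str:
    # Hypothetical User-Agent string; some sites block the default Python client.
    req = Request(url, headers={"User-Agent": "Mozilla/5.0 (compression-ratio-sketch)"})
    with urlopen(req, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def ratios_for_serp(urls, fetch=fetch_html):
    """Return (position, url, ratio) tuples; urls must already be in SERP order.

    A page that fails to download or decode gets None instead of a ratio.
    """
    results = []
    for position, url in enumerate(urls, start=1):
        try:
            ratio = round(compression_ratio(extract_text(fetch(url))), 2)
        except Exception:  # network errors, timeouts, unknown encodings, ...
            ratio = None
        results.append((position, url, ratio))
    return results
```

With your own list of ranked URLs, `for row in ratios_for_serp(top_urls): print(row)` prints one line per result. The `fetch` parameter is there so you can swap in a different downloader (or a dictionary lookup when testing) without touching the rest.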

For this experiment, I chose the keyword "Python for SEO." Curious to see the results? Here’s what I found:

This single example isn’t enough to draw any firm conclusions, but it’s an intriguing starting point and definitely worth exploring further.

Another thing to keep in mind: this is only ONE potential factor among many that may or may not impact rankings, so don’t expect the relationship to be linear or straightforward.

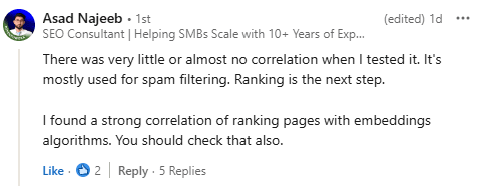

I also received an interesting comment from Asad Najeeb on my LinkedIn post, and it makes sense: there may be no direct relationship between ranking and compression ratio, because compression may only be used for spam filtering, which happens BEFORE rankings are decided.

However, I always like to test things out, so I took this to the next level and created ANOTHER Python script. It takes a CSV file of URLs and their average rankings, calculates the compression ratio of each URL, and then plots some graphs to show the connection. More cool stuff, right? 😄

OK, before we jump into scripts and graphs, here's a quick background on compression ratio.

What is Compression Ratio?

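In SEO, compression ratio compares the original size of a page's text with its compressed size: the original size divided by the compressed size. The more repetitive the text, the smaller it compresses and the higher the ratio. One common compression technique is Gzip, which works by finding repeated sequences in the content and replacing them with short references to earlier occurrences.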
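To see why repetition drives the ratio up, here is a minimal sketch using Python's built-in `gzip` module, assuming the ratio is original bytes divided by gzip-compressed bytes. The two sample strings are made up for illustration. Gzip's DEFLATE format stores a repeated phrase as a back-reference to its earlier occurrence, so the keyword-stuffed string shrinks dramatically while the varied paragraph barely shrinks at all.

```python
import gzip


def compression_ratio(text: str) -> float:
    """Original size divided by gzip-compressed size (higher = more redundant)."""
    original = text.encode("utf-8")
    if not original:
        return 0.0
    compressed = gzip.compress(original)
    return len(original) / len(compressed)


# Hypothetical examples: one keyword-stuffed, one ordinary paragraph.
repetitive = "best cheap running shoes best cheap running shoes " * 40
varied = (
    "Gzip uses the DEFLATE algorithm, which combines LZ77 back-references "
    "with Huffman coding. Repeated phrases are stored as short pointers to "
    "an earlier occurrence, so text that keeps repeating itself shrinks far "
    "more than text that rarely repeats."
)

print(f"repetitive: {compression_ratio(repetitive):.2f}")
print(f"varied:     {compression_ratio(varied):.2f}")
```

Note that gzip adds a fixed header and trailer, so very short texts can even score below 1.0; comparing pages of broadly similar length keeps the numbers meaningful.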

Why are we talking about compression ratio?

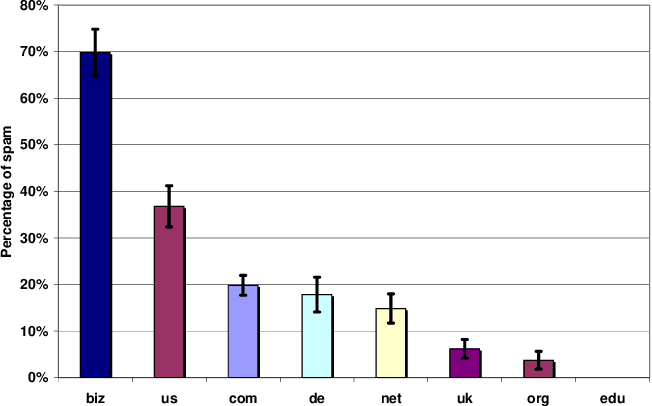

Google employs various systems to evaluate webpage quality. One potential way to detect low-quality or spammy content is to look at a page's compression ratio. Pages with high compression ratios may have a lot of redundant or low-value content, which could signal spam.

As Roger Montti points out, “High compressibility correlates to spam.” However, there’s always a margin for error: some high-compression pages might be flagged as spam even if they’re not.
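The margin for error is easy to reproduce. The sketch below flags every page at or above a cutoff; the 4.0 threshold is a hypothetical value chosen for illustration, not anything Google has published, and the three pages are made up. A keyword-stuffed page gets flagged, but so does a legitimate product listing whose repetitive template (same description, different item number) compresses just as well.

```python
import gzip


def compression_ratio(text: str) -> float:
    """Original size divided by gzip-compressed size."""
    original = text.encode("utf-8")
    return len(original) / len(gzip.compress(original)) if original else 0.0


def flag_high_compression(pages: dict, threshold: float) -> list:
    """Return the sorted URLs whose text compresses at or above `threshold`.

    `threshold` is a hypothetical cutoff for illustration only.
    """
    return sorted(url for url, text in pages.items()
                  if compression_ratio(text) >= threshold)


pages = {
    # Keyword-stuffed doorway copy: repetitive AND low value.
    "/cheap-shoes": "cheap shoes near me best cheap shoes near me " * 30,
    # Legitimate catalog page: repetitive template, but genuinely useful.
    "/catalog": "".join(
        f"Item {n}: Classic white T-shirt, 100% cotton, machine washable. Add to cart.\n"
        for n in range(1, 61)
    ),
    # Ordinary editorial prose.
    "/guide": (
        "Start by crawling your site, then export titles and headings. "
        "Compare them against search intent, note thin sections, and "
        "prioritise fixes by traffic and conversions before rewriting."
    ),
}

print(flag_high_compression(pages, threshold=4.0))
```

Here `/catalog` is the kind of false positive the quote warns about: a high ratio says the text is repetitive, not that it is spam.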

What does the data say?

There's a research paper titled "Detecting spam web pages through content analysis" that includes some interesting analysis. For example, in the dataset they analyzed, they found the following spam occurrence per top-level domain:

We know that Google does not explicitly give preference to .org or .edu domains for backlinks (or so they say 😄). However, these domains often have characteristics that make them high-quality link sources [read more on this here], so the chart above aligns with this narrative.
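If you have your own sample of URLs labelled as spam or not spam, a per-TLD breakdown like the one in the paper is a simple group-by. This is a minimal sketch with a hypothetical function name; the labelled sample in the usage note is made up and is not the paper's dataset. It takes the TLD as the last dot-separated label of the hostname, so `example.co.uk` counts as `uk`.

```python
from collections import Counter
from urllib.parse import urlparse


def spam_share_by_tld(labelled):
    """labelled: iterable of (url, is_spam) pairs -> {tld: fraction that is spam}."""
    totals, spam = Counter(), Counter()
    for url, is_spam in labelled:
        host = urlparse(url).hostname or ""
        tld = host.rsplit(".", 1)[-1].lower()  # last label of the hostname
        totals[tld] += 1
        spam[tld] += 1 if is_spam else 0
    return {tld: spam[tld] / totals[tld] for tld in totals}
```

For example, `spam_share_by_tld([("https://a.biz/x", True), ("https://b.biz/", False), ("https://c.org/", False)])` returns `{"biz": 0.5, "org": 0.0}`. With a small sample, a single page can swing a TLD's share a lot, so treat the numbers as directional.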

So what do we do with all of this information? LOL.

Building on this, I also created another Python script that takes a CSV of URLs and their average rankings (you can use the ranking for the main keyword only if you prefer), calculates the compression ratio for each URL, and outputs some graphs so you can look at the bigger picture.

Want to give it a try? Here's the code in Google Colab. Feel free to reach out on LinkedIn if you run into any challenges. Make sure the CSV has two columns titled url and ranking (in lowercase).
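A wrong header is the most common way a script like this fails, so it helps to check the columns up front. This is a minimal sketch of the loading step only, using the standard `csv` module; it is not the code from the Colab notebook, and the function name `load_rankings` is hypothetical. It expects exactly the lowercase `url` and `ranking` headers described above, skips rows with an empty URL, and reports the line of any ranking that is not a number.

```python
import csv
import io

REQUIRED_COLUMNS = ("url", "ranking")


def load_rankings(stream):
    """Read (url, ranking) pairs from a CSV text stream with lowercase headers."""
    reader = csv.DictReader(stream)
    fieldnames = reader.fieldnames or []
    missing = [col for col in REQUIRED_COLUMNS if col not in fieldnames]
    if missing:
        raise ValueError(f"CSV is missing column(s): {', '.join(missing)}")
    rows = []
    for line_no, row in enumerate(reader, start=2):  # line 1 is the header
        url = (row["url"] or "").strip()
        if not url:
            continue
        try:
            ranking = float(row["ranking"])
        except (TypeError, ValueError):
            raise ValueError(
                f"line {line_no}: ranking {row['ranking']!r} is not a number"
            ) from None
        rows.append((url, ranking))
    return rows


if __name__ == "__main__":
    # Hypothetical in-memory CSV; with a real file use
    # open("rankings.csv", newline="", encoding="utf-8") instead.
    sample = io.StringIO("url,ranking\nhttps://a.example/,1.5\nhttps://b.example/,7\n")
    print(load_rankings(sample))
```

Rankings are parsed as floats because averaged positions are usually fractional.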

Practical Applications for Compression Ratio Analysis

So, what’s the value of compression ratio analysis?

Spam Updates & HCU Recovery:

If your site has been hit by a spam update or the Helpful Content Update (HCU), analyzing compression ratios could uncover patterns worth addressing. I mean, why not?

Content Quality Audits:

Use it as part of a broader content audit to identify pages with high redundancy that may need optimization.

SEO Experimentation:

This analysis is just one piece of the SEO puzzle. Combine it with other metrics, such as embeddings or link profiles, for a more comprehensive view. It may be something I tackle in upcoming newsletters!
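For a content audit, comparing each page with the rest of its own site is often more useful than a fixed cutoff, since templates and writing style shift the baseline. This minimal sketch flags pages whose ratio is well above the site's median; the `factor=1.5` default and the function name `audit_candidates` are hypothetical choices for illustration, and the output is a review list, not a spam verdict.

```python
import gzip
from statistics import median


def compression_ratio(text: str) -> float:
    """Original size divided by gzip-compressed size."""
    original = text.encode("utf-8")
    return len(original) / len(gzip.compress(original)) if original else 0.0


def audit_candidates(pages: dict, factor: float = 1.5) -> list:
    """Return (url, ratio) for pages above `factor` x the site median, highest first.

    `factor` is a hypothetical knob; tune it to your own site.
    """
    if not pages:
        return []
    ratios = {url: compression_ratio(text) for url, text in pages.items()}
    site_median = median(ratios.values())
    flagged = [(url, r) for url, r in ratios.items() if r > factor * site_median]
    return sorted(flagged, key=lambda item: item[1], reverse=True)
```

Pass in a dictionary of URL to extracted page text (for example, the visible text from a crawl) and review the returned pages by hand, starting with the highest ratios.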

Final Thoughts

While the relationship between compression ratio and rankings may not be direct or linear, it’s an interesting area to explore. I always enjoy testing new ideas and learning from them. If you decide to experiment with this, I’d love to hear your findings!